2.5 GenAI Prompting

Robin L. Potter

A prompt is how you communicate with genAI applications. You can prompt using voice, text, images, and reference documents. You can also use voice and visual modes, which allow two-way spoken interaction and give the AI access to your camera. Regardless of the mode, remember that a prompt is one essential way for humans to maintain agency in the process of using genAI applications. It is the way humans can direct the tools to provide output that serves human purposes.

When you direct a genAI application to complete a search or to create a document, message, or image, you have an opportunity to control the kind of information included in the output. To exercise this control, you must apply your critical thinking, planning, and analytical skills: the more specific your prompt and your follow-up, the better you will be able to direct the model to deliver output that serves your purpose. Given the increasing persuasive capabilities of the models (Matz et al., 2024), it is imperative that users know how to engage proactively and intentionally with AI tools so that accountability remains clearly in the hands of the human and not the machine. For this and many other reasons, it is important to know how to prompt and how to analyze output. For more on this imperative, see Miao and Holmes (2023).

This chapter will cover prompting strategies. To get started, view this video by Ethan Mollick and Lilach Mollick (2023) of the Wharton School at the University of Pennsylvania, which covers GenAI prompting basics.

Prompting Modes

Prompt crafting is the process by which you create statements, directions, or questions that guide the genAI application to respond in a focused and purposeful manner. A prompt is the statement, direction, or question that initiates the response. The prompt may or may not be accompanied by an image, reference text, or a data set that serves as the knowledge source informing the model’s activity and limiting the context from which it draws information.

Though prompt crafting is similar to prompt engineering, prompt crafting is the activity in which most end users engage, whereas prompt engineering is mostly performed by those with the technical skills to refine the model’s ability to process prompts or by those with advanced prompting skills who want to tighten or limit the model’s mode of response. Ramlochan (2024) notes that prompt engineering is conducted by those with specialized encoding skills. Unfortunately, as of this writing, many who communicate on the topic of prompting do not differentiate between the two terms.

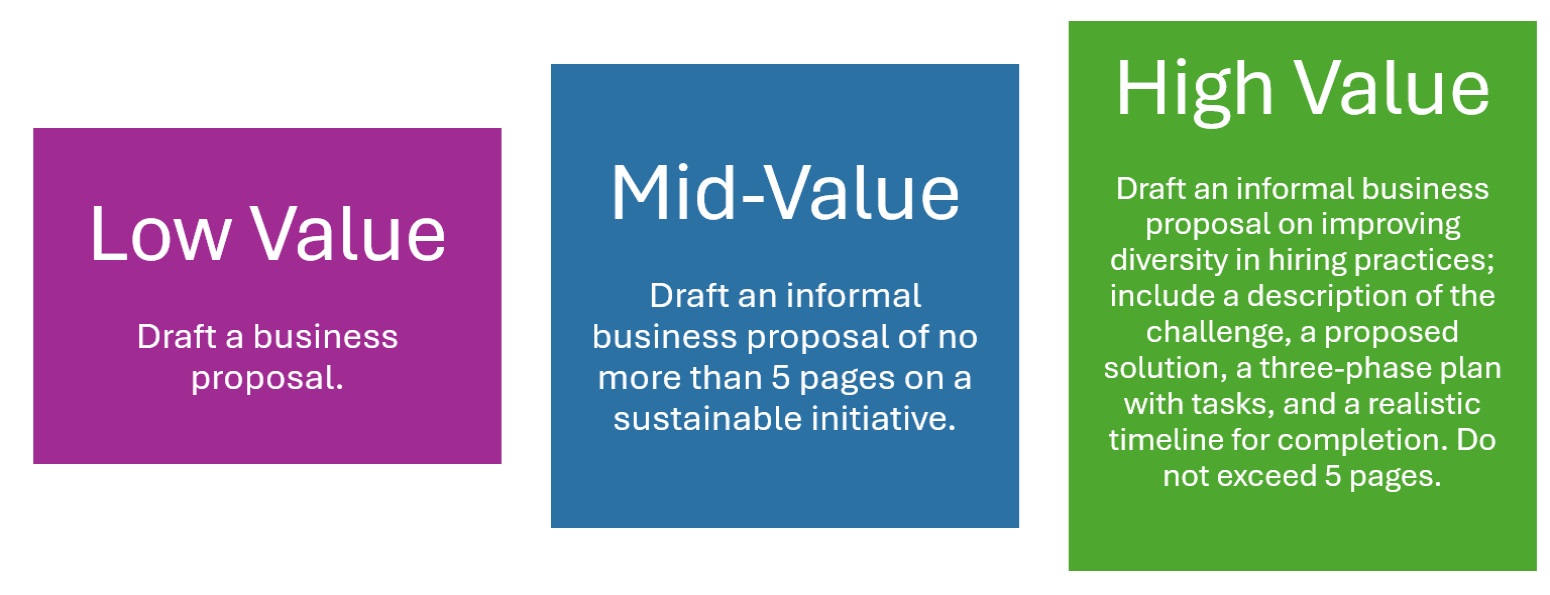

How you go about the process of prompting is up to you: You may choose to elicit an output all at once with one prompt, or you may choose to break up the prompting task into phases, proceeding in a step-by-step manner. You may also choose to create a prompt that directs the application itself to proceed using a phased approach. We can categorize prompting into three types:

- Casual

- Conversational

- Structured

With casual prompting, you simply ask a question or give a command. This is the easiest form of prompting, typically one or two sentences long. With conversational prompting, you engage in a back-and-forth exchange or use a question-and-answer approach to refine the focus of the output. Conversational prompts may also include considerable detail and a reference document. Structured prompts may consist of several paragraphs and examples written specifically to direct the application toward a narrower, purpose-driven output that addresses an identified problem.

With voice and audio functions, your interactions with an LLM can closely resemble the kind of conversation you would have with any person. When you use voice, the LLM will respond to you using both voice and text. For example, OpenAI’s GPT-4o (o for “omni”) and Microsoft’s Copilot can engage with you in spoken interactions that simulate real-life conversations in terms of cadence, tone, content, and pace. Paired with camera access, the AI can also interact with the world around you as you communicate.

Those who feel timid about prompting can adapt prompts created by others. Some organizations have their own prompt libraries containing prompts relevant to company goals and situations. These libraries may be created to improve efficiency, for example by helping employees avoid duplicating work or maintain certain standards. You may also ask the genAI tool to improve a prompt for you by giving it a draft version and some basic information. As you build confidence in prompting, though, you will notice that creating your own prompts results in output that is more aligned with your purpose and context, thus saving you time in the long run.

Your preferred method(s) will develop over time, and with experience they will become adaptable to the context and purpose at hand. Whichever approach you choose, always consider the output as draft material that will require your thorough review before use in your communications.

See two examples of simple prompts below. Example 2 was created using an enterprise version of Copilot in Creative mode, which gives access to GPT-4.

Examples of Simple Prompts

Example 1

Review the following draft email message written to employees at a mid-sized business and provide suggestions on how it can be improved. Offer suggestions to improve the approach, idea development, tone, organization, and inclusivity. Suggest any other additional content that would be useful to achieve the stated purpose.

[The user would input the draft message followed by the prompt asking for a review by the LLM, which would then respond with suggestions for improvement.]

Example 2

A brief, mid-value business-related prompt submitted to Copilot:

Draft an email message to colleagues reminding them of the recent company sustainability initiative that encourages car pooling. You should mention the benefits and include an incentive.

Draft email message created by Copilot using the Professional mode on January 23, 2024.

Subject: Carpooling for a greener future

Dear colleagues,

I hope this email finds you well. I am writing to remind you of the recent company sustainability initiative that encourages car pooling among employees. As you may already know, carpooling is a great way to reduce your carbon footprint, save money on gas and parking, and make new friends. It also helps to ease traffic congestion and improve air quality in our city.

To support this initiative, our company is offering a special incentive for carpoolers. For every week that you carpool with at least one other colleague, you will receive a $10 gift card that you can use at any of our partner stores or restaurants. You can also earn extra points for your team in the monthly wellness challenge.

To join the carpooling program, please register on the company intranet and fill out a short survey about your preferences and availability. You will then be matched with other colleagues who live near you and have similar schedules. You can also create your own carpool group if you already have some potential partners in mind.

If you have any questions or concerns about the carpooling program, please feel free to contact me or the HR department. We are happy to assist you and provide more information.

Thank you for your participation and commitment to making our company more sustainable and environmentally friendly.

Sincerely,

Your name

Note how the resulting draft output in the second example goes beyond what was requested by also including information about how to register for the carpooling program (see the third paragraph of the email). In this particular example, the additional information is useful and would probably be included in the final copy. However, this added information also shows how the LLM can include content that was not requested and that, without careful review, could steer your document in a direction that does not serve your purpose. As such, this example illustrates the necessity of carefully reviewing and editing the output.

Prompt Elements

Various practitioners have developed strategies for creating prompts and, with experience, have identified the most effective methods for prompting. Ethan Mollick (November 2023) offers this advice: “You can become a world expert in the application of AI to your domain by just using AI a lot until you figure out what it is good and bad at.” He also advises against overcomplicating things. As an example, Mollick offers the following prompt: “I need to write an email to a teacher for an extension. help” (November 2023). It can be that simple. Most prompts created for business purposes, however, will require more information.

Whatever approach you use, adopting skillful and thoughtful methods that are adapted to changing contexts and purposes will save you time and result in usable outputs. Completing a careful problem analysis including your purpose, audience, and context, as discussed in Chapter 2.4 on Choosing and Using GenAI, is the first step in effectively creating your prompting strategy. Eventually, with experience and experimentation, you will find a strategy that works best for you and your routine needs.

Essentially, prompts will contain any, all, or a combination of the following genAI cues (OpenAI, n.d.). What information you choose to include should be governed by your organization’s privacy and confidentiality policy along with your purpose and audience needs. Whatever you do, be flexible and modify your prompting as the process unfolds. You are not going for the perfect prompt; rather, you just want the one or the sequence that works.

1. Role: Roles can be delineated in two ways, depending on the LLM you are working with. You can give the model a role (“You are the manager of a small accounting department in a local supermarket”; “You are a tutor specializing in the subject of finance”), or you can identify your own role (“I am an office assistant at a building engineering company”). Identifying either your role or the LLM’s role will help the application understand the range of responsibilities and knowledge it should draw on for its response and how it should interact with you. Note that these examples include no actual company names; this protects confidentiality, especially if you are not using an enterprise version of the LLM.

2. Context: Depending on what the situation calls for, you may want to do any of the following:

- Explain the overall situation or circumstance that is creating the need for the prompt.

- Describe the type of business, department, client, institution, etc.

- Describe the communication strategy. For example, are you creating a series of social media posts, a direct mailing, or meeting collateral?

- Explain a complication, challenge, opportunity, etc.

Again, for confidentiality reasons, be specific but avoid identifying actual people, companies, and places.

3. Purpose or goal: Clearly state the goal you want to achieve in terms of any one or a combination of the following types:

- Desired output: State what you want the LLM to produce for you in terms of document type or content. Do you want the model to create a draft table or chart, or a draft email message, proposal, or other document? Do you want it to draft content for a presentation?

- Communication intent: Describe your communication objective. Do you want to persuade, direct, or inform? Do you want to request time away from the office to catch up on a backlog of work? Do you need to persuade a room full of executives to accept your proposed four-day work week? Are you informing employees about a change to their critical illness insurance plan?

4. Information required: Include key information that will enable the LLM to further narrow its activity. Be specific. Include relevant data. If necessary, include information about the organization’s sustainability, inclusion, and other policies. Consider uploading a reference document or case study from which the model can draw information (Mascellino, 2023), or, if working within the Microsoft Copilot enterprise environment, allow the system to access relevant reference documents (if the administrator has made this possible). You want to provide enough information to ensure that the model will create output that aligns with your needs.

5. Example: You can also include examples of the output you are looking for. If you already have a sample sales letter, for example, but need it updated for a more current need, you can upload the sample document (again, only if the organization’s confidentiality policy allows) and ask the LLM to update it using the more current information you provide. Note that uploading the document before adding the prompt seems to result in better output.

6. Steps: You can direct the model on how to proceed:

- You can break up your prompt into a set of steps and submit the prompt segments one at a time, each following the model’s response to the previous one.

- You can instruct the LLM itself to respond in a step-by-step manner so the information is organized and sequential.

- You can instruct the application to work in a phased manner so that certain information is released before other information (Mollick & Mollick, September 2023; Mollick, November 2023).

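If you reuse prompts, the six elements above can be treated as fields in a template. The minimal Python sketch below illustrates that idea under stated assumptions; it is not a tool described in the cited sources, and the class name, function name, and sample text (apart from the office-assistant role) are hypothetical. It joins whichever elements you fill in into one labeled prompt that you could paste into a genAI application, skipping any you leave empty.

```python
from dataclasses import dataclass, field


@dataclass
class PromptElements:
    """Hypothetical template holding the six prompt elements.

    Every field is optional, because a prompt may use any, all,
    or a combination of the elements.
    """
    role: str = ""
    context: str = ""
    purpose: str = ""
    information: str = ""
    example: str = ""
    steps: list[str] = field(default_factory=list)


def build_prompt(elements: PromptElements) -> str:
    """Join the filled-in elements into one labeled prompt."""
    labeled = [
        ("Role", elements.role),
        ("Context", elements.context),
        ("Purpose", elements.purpose),
        ("Information", elements.information),
        ("Example", elements.example),
    ]
    # Skip any element the user left empty.
    parts = [f"{label}: {text}" for label, text in labeled if text]
    if elements.steps:
        # Number the steps so the model works through them in order.
        numbered = "\n".join(
            f"{i}. {step}" for i, step in enumerate(elements.steps, start=1)
        )
        parts.append("Steps:\n" + numbered)
    # A blank line between elements keeps them visually separate.
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Hypothetical sample values; no real company is named.
    print(build_prompt(PromptElements(
        role="I am an office assistant at a building engineering company.",
        context="Our department is moving to a new floor next month.",
        purpose="Draft an email message informing employees about the move.",
        steps=[
            "Ask me three questions about the move.",
            "Then draft the email using my answers.",
        ],
    )))
```

Because empty fields are dropped, the same template can produce a brief casual prompt (a purpose alone) or a fully structured one.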

Using Delimiters

Delimiters are notations, such as triple quotation marks or XML tags, that users add to prompts to clearly mark off specific content like examples or reference text. Here is an example from OpenAI (n.d.):
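Separately from OpenAI’s example, the minimal Python sketch below shows two common delimiter styles, triple quotation marks and XML-style tags, placed around pasted reference text. The function name and the default `draft` tag are hypothetical choices; any clearly distinct markers, referred to consistently in the instruction, should serve the same purpose.

```python
def delimit(instruction: str, reference_text: str,
            style: str = "tags", tag: str = "draft") -> str:
    """Place reference text after an instruction, fenced off by delimiters.

    style="quotes" uses triple quotation marks; style="tags" uses
    XML-style opening and closing tags named by `tag` (a hypothetical name).
    """
    body = reference_text.strip()  # drop stray spaces or blank lines from pasting
    if style == "quotes":
        return f'{instruction}\n\n"""\n{body}\n"""'
    if style == "tags":
        return f"{instruction}\n\n<{tag}>\n{body}\n</{tag}>"
    raise ValueError(f"unknown delimiter style: {style!r}")


if __name__ == "__main__":
    print(delimit(
        "Review the draft email message between the <draft> tags and "
        "suggest improvements to its tone and organization.",
        "Dear colleagues, ...",
    ))
```

Naming the delimiter in the instruction itself (here, "between the <draft> tags") tells the model which text to treat as material to work on rather than as further instructions.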

Prompting for Tables and Graphs

One of the great benefits of using an LLM in document preparation is the amount of time it can save as you analyze and prepare various elements for your documents including tables and graphs.

Analyzing content: LLMs can analyze charts, graphs, and tables in much the same way they analyze images and text. You would begin by uploading the visual aid that you want analyzed, then create a prompt that directs the model on exactly what type of information you want it to extract from the charts, graphs, or tables. In addition, you can upload a data set using ChatGPT’s Advanced Data Analysis feature (formerly Code Interpreter; see below) and direct the tool to analyze or extract data according to specific instructions. Again, the more precise your prompt, the more useful the output will be.

Creating tables: First, think through the data and table elements that you would like the application to include, then create a specific prompt telling the LLM exactly how you want that data to appear in your table. Here is an example using the following prompt:

Prompt: Create a table comparing Bing Chat, ChatGPT, Gemini, Llama 2, and Claude using these criteria: ease of use, accuracy, safety, and cost to run; include verifiable citations and a list of references in APA style. (Prompt Information: ChatGPT 3.5, free version, February 14, 2024)
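If you create comparison tables regularly, it can help to keep the row items and the column criteria as separate lists and generate the prompt from them, so the layout is always spelled out. The Python sketch below is a hypothetical helper, not a feature of ChatGPT or any other application; it produces a prompt with the same structure as the example above, with the rows and columns made explicit.

```python
def join_items(items: list[str]) -> str:
    """Join items into readable English: 'a', 'a and b', 'a, b, and c'."""
    if len(items) <= 2:
        return " and ".join(items)
    return ", ".join(items[:-1]) + ", and " + items[-1]


def table_prompt(rows: list[str], criteria: list[str],
                 citation_style: str = "APA") -> str:
    """Build a table prompt that states the rows, columns, and sourcing."""
    return (
        f"Create a table comparing {join_items(rows)}. "
        f"Use one row per item and one column for each of these criteria: "
        f"{join_items(criteria)}. "
        f"Include verifiable citations and a list of references in "
        f"{citation_style} style."
    )


if __name__ == "__main__":
    print(table_prompt(
        ["Bing Chat", "ChatGPT", "Gemini", "Llama 2", "Claude"],
        ["ease of use", "accuracy", "safety", "cost to run"],
    ))
```

Stating which items become rows and which criteria become columns leaves less for the model to guess about the table's layout.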

Prompting for Image Creation

Several diffusion models, such as Midjourney and Stable Diffusion, have become known for creating stand-out images that range anywhere from total fantasy to complete realism. Initially, users needed some specific knowledge of photography to prompt for photo-realistic image outputs. However, these models, along with Copilot and ChatGPT (both of which access OpenAI’s DALL-E image generator), have evolved such that a user need only provide a detailed plain-language description to have an image rendered.

Prompting strategies for image creation must include highly descriptive language if users want to direct the application towards producing images true to purpose. The descriptions should direct the application in any one or a combination of the following areas, among many others:

- Background and foreground

- Contextual signifiers (places, landmarks, time, seasons)

- Cultural imagery

- Degree of realism

- Diversity and inclusion

- Historical imagery (historical sites, monuments)

- People (roles, activity, relationships)

- Photographic qualities (realistic, painterly, cartoony)

- Social situations

- Sustainability cues

- Symbolism

- Technological contexts
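One way to make sure a description addresses the areas listed above is to fill them in one at a time. The minimal Python sketch below is a hypothetical helper, not part of Copilot, DALL-E, Midjourney, or any other image tool; it joins a subject with whatever labeled details you supply, such as background or degree of realism, into a single plain-language prompt.

```python
def image_prompt(subject: str, **details: str) -> str:
    """Combine a subject with labeled descriptive details into one prompt.

    Keyword names become labels (underscores turn into spaces), and the
    details keep the order in which they are passed.
    """
    parts = [f"Create an image of {subject}"]
    for area, description in details.items():
        parts.append(f"{area.replace('_', ' ')}: {description}")
    return "; ".join(parts) + "."


if __name__ == "__main__":
    print(image_prompt(
        "a family of four pink elephants on a hike",
        degree_of_realism="realistic",
        season="October",
        background="mountains",
        foreground="a meadow with trees all around",
    ))
```

Listing the areas explicitly also makes it easier to notice which ones, such as diversity and inclusion, you have not yet specified.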

Compare the following prompts and image examples:

Prompt 1: Create an image of a family of pink elephants out on a walk. (Copilot, February 14, 2024)

Prompt 2: Now create a realistic image of a family of four pink elephants on a hike; they are exploring a mountainous area in October; in the background there are mountains, in the foreground there is a meadow, and trees all around. (Copilot, February 14, 2024)

As you can see, the more concrete and specific information you provide, the better the image will be. This version includes the requested elements of the landscape and season indicators. Now consider a situation in which the representation of diversity is key in promoting inclusivity and opportunity for all in workplace settings:

Prompt 3: Create an image of three legal professionals consulting about a case. (Copilot, February 14, 2024)

The four images created by the model depicted two men and one woman, suggesting that legal professionals are a group composed mostly of men. In the image shown here, diversity has been somewhat represented. Note the extra (seventh) hand on the shoulder and how the hands have been positioned, some in a manner not quite appropriate for a workplace setting. Image generators are known to have difficulty representing hands; take this as one example of why vigilance in image review is necessary. Now, let’s push the model to depict more inclusion:

Prompt 4: Create a realistic image of legal professionals representing various racial, disabled, and gendered groups. The professionals should be consulting with each other on a case in a legal office that is modestly appointed and well lit. The image must be realistic. (Copilot, February 14, 2024)

Though this sample image gets the diversity and inclusivity right, it could be better lit. And though realism was requested twice, the model was not able to produce a more realistic image. While this example is enough to illustrate the power of descriptive and specific prompting, it also emphasizes the importance of prompting specifically for diversity and inclusion if you want them represented in the imagery you incorporate into your documents.

Exercise: Image Prompt

Knowledge Check

Tips for Prompting Efficiently

The following tips will help you keep on track as you develop your prompting skills (adapted from Durham College, 2023):

- Be Specific: If you ask a vague question, you may get a vague answer. The more detail you provide, the better the model’s response is likely to be.

- State Your Intent: If there’s a specific purpose for your question, state it in the prompt.

- Direct the Output Format: If you want the answer in a specific format, state it in your prompt. Do you want a table or an image? A paragraph? A step-by-step process?

- Ask Follow-Up Questions: If the response wasn’t what you expected, or if you need more information, ask follow-up questions to clarify and get to what you need.

- Experiment with Different Phrasings: If you’re not getting the response you want, try asking the question in a different way. The model might understand one phrasing better than another.

- Prompt for Diversity and Inclusion: Ensure that the text and image outputs reflect the diversity of our society and promote inclusion.

What to Watch For

Prompting is only one step in the process of obtaining usable content. Remember that you are accountable for the accuracy, biases, and citations of any LLM output you include in your documents. Once you have draft output to work with, routinely go through the content to check for these issues:

- Biases: Ensuring that your documents and images are inclusive of all groups entails carefully screening LLM output for racial, sexual, age-related, religious, and socioeconomic biases. Such biases perpetuate inequities in society and in organizations, affecting how people relate to each other, how they can do their jobs, and what opportunities and remuneration they receive. Your goal should be to avoid perpetuating these inequities. Generated images in particular often lack diversity, so when creating your prompt, be sure to specify that any people in the images represent diverse groups.

- Disengagement or “falling asleep at the wheel”: Dell’Acqua (n.d.) found through research that many employees tend to simply use LLM output as is, without taking the time to check the contents. While LLM outputs are continuously improving in quality, inaccuracies, hallucinations, biases, and unsupported claims still appear often enough to significantly damage a company’s reputation and an employee’s career if the output is used without careful review. Always engage with the output to maintain your agency in the process of content creation.

- Errant symbolic referents: Images will contain elements with symbolic meaning that influence how a person reacts to the image and to your overall message. Think, for example, of how the color red carries different meanings across cultures and occasions. Being sensitive to what an image can represent to your audience can help you keep a tight focus on your messaging. Especially when using images to support information in your documents, ensure that all the imagery aligns with your purpose and contains no elements that could potentially cause offense. Checking images requires scrutiny.

- Inaccuracies or hallucinations: Although each successive version of genAI models shows some improvement in accuracy, with less outright hallucinated or fake (obviously made-up) information, the output must still be checked for accuracy. Ensure that the LLM content you use aligns with your specific purpose and contains verifiable facts and information that you can confirm as accurate.

- Privacy violation: Check the output for content that could compromise privacy. Enterprise LLMs are set up to protect users’ privacy. However, if you are using free versions of non-enterprise LLMs, private information that was included in training data or entered as input in chats can reappear in output. Ensure that the output does not contain private personal, medical, financial, or other such information.

- Sandbagging: Sometimes genAI models will perform beyond your expectations; at other times the output will be underwhelming. Depending on which application you use, the LLM may respond only within the limits established by the knowledge you exhibit in your prompt, and no further. So while many think that an LLM will automatically augment their knowledge-generation capabilities, watch for output that only matches your own ability and knowledge. This potential behavior emphasizes the need for users not only to create specific prompts but also to follow up on unsatisfactory output by asking the LLM to stretch the limits of the current output. Use your critical thinking skills not only to analyze the output but also to refine prompts to obtain correct, narrow, and specific output that meets your purpose at the required degree of expertise. And if the application you are using does not perform as expected, choose a different one. For more information on sandbagging, see Section C in Perez et al. (2022).

- Sycophancy: GenAI will sometimes behave in a manner that aims to obtain your approval. “When faced with complex inquiries, language models will default to mirroring a user’s perspective or opinion, even if the behavior goes against empirical information” (Sponheim, 2024). This deceptive behavior can mislead you into agreeing with assumptions and statements the model makes even though they may not be accurate or in your best interest. The behavior originates in the training process known as reinforcement learning from human feedback, in which rewards are given for some behaviors and information and not others. Because the model is trained to earn positive ratings, it may “lie” to obtain one (see also Perez et al., 2022).

- Unsupported claims: As a rule, do not use LLMs for research unless they are made specifically for that purpose, such as Perplexity, Elicit, Consensus, ResearchRabbit, and other such tools. ChatGPT, for example, is great for ideation (e.g., “list some topic areas in the field of sustainability”), but it is not a research engine and often responds with output that contains unreliable information. Output from chat models will often contain claims that are not supported by sources or, as in the case of Copilot, may include sources that are not relevant. Copilot sometimes copies from internet sources as well, so beware of using plagiarized content! Always ensure that claims are supported with verifiable sources by doing the research yourself. Also ensure that the claims are not taken out of context: they must be true to the intent of the original document from which they originated.

Prompting has become an essential skill, so learning various strategies to communicate with and direct genAI applications will work to your benefit. Using prompt elements will help you obtain more specific and purposeful output, thus saving you time. Eventually, you will become more comfortable using the technology and may even integrate it into ideation, exploration, and inquiry activities.

References

Acar, O. A. (2023, June 8). AI prompt engineering isn’t the future. Harvard Business Review. https://hbr.org/2023/06/ai-prompt-engineering-isnt-the-future

Carrigan, J. (2023, Spring). From AI-driven teaching: Revolutionize your classroom with Bing and ChatGPT. Durham College. How to write a prompt to engage with generative AI (durhamcollege.ca).

Center for Faculty Development and Teaching Innovation. (2023). Prompting techniques, formulas, and examples. In Generative artificial intelligence in teaching and learning (Using GenAI tools: Prompts and copyright considerations). Centennial College (pressbooks.pub). CC BY 4.0.

Cumming, L. (2023, July 24). Why you shouldn’t be writing a new prompt every time: A case for a structured approach to prompting using generative AI. isophist.com.

Dell’Acqua, F. (n.d.). Falling asleep at the wheel: Human/AI collaboration in a field experiment on HR recruiters [PDF]. squarespace.com.

Downing, C. (2023, September 18). How to get started with ChatGPT Advanced Data Analysis [Video]. YouTube. https://youtu.be/SzTjGAO6fKo?si=lOQoCOJK7TqVSYD9

Durham College. (2023). How to write a prompt to engage with generative AI. The Center for Teaching and Learning (durhamcollege.ca). CC BY-NC-SA 4.0.

Gewirtz, D. (2023, November 17). How to use ChatGPT to make charts and tables. ZDNET.

Mascellino, A. (2023, September 27). How to write AI prompts: ChatGPT, Bard, Bing & more [+examples]. Techopedia (techopedia.com).

Matz, S. D., Teeny, J. D., Vaid, S. S., Peters, H., Harari, G. M., & Cerf, M. (2024). The potential of generative AI for personalized persuasion at scale. Scientific Reports (nature.com).

Miao, F., & Holmes, W. (2023). 5.2 A ‘human-centred and pedagogically appropriate interaction’ approach. In Guidance for generative AI in education and research. UNESCO. unesdoc.unesco.org/ark:/48223/pf0000386693/PDF/386693eng.pdf.multi

MIT Sloan. (n.d.). How to use ChatGPT’s Advanced Data Analysis feature. MIT Sloan Teaching & Learning Technologies.

Mollick, E. (2023, November 1). Working with AI: Two paths to prompting. Don’t overcomplicate things. One Useful Thing (oneusefulthing.org).

Mollick, E., & Mollick, L. (2023). Practical AI for instructors and students Part 3: Prompting AI [Video]. YouTube. https://youtu.be/wbGKfAPlZVA?si=DmccyjOZ-Eyndidv

OpenAI. (n.d.). Prompt engineering. OpenAI API documentation.

OpenAI. (2024, February 13). Memory and new controls for ChatGPT. OpenAI (openai.com).

Perez, E., et al. (2022). Discovering language model behaviors with model-written evaluations. arXiv:2212.09251 (arxiv.org). Via Ethan Mollick (2024, January), LinkedIn post: “I have been thinking about this paper on AI ‘sandbagging’ (when the AI…”

Ramlochan, S. (2023). Conversational prompting in generative AI. Prompt Engineering Institute (promptengineering.org).

Ramlochan, S. (2024). Conversational vs structured prompting. Prompt Engineering Institute (promptengineering.org).

Shapiro, D. (n.d.). Glowforge JD: Job description prompt [Google Doc]. Via Ethan Mollick (2024, June 20), Latent expertise: Everyone is in R&D. One Useful Thing (oneusefulthing.org).

Sponheim, C. (2024). Sycophancy in generative-AI chatbots. Nielsen Norman Group.

GenAI Use

Chapter review exercises were created with the assistance of Copilot.