Unit 17: GenAI Terminology: A Primer

Introduction

Review the following genAI terminology to become familiar with not only the vocabulary but also the components and processes that comprise this technology. The more familiar you become with these elements, the more confident you will feel in working with the tools.

The following glossary is offered in two parts: The GenAI System and Usage. The GenAI System glossary has been largely adapted from the Glossary of Artificial Intelligence Terms for Educators (Ruiz & Fusco, 2024); its terms are listed in a logical sequence rather than alphabetically. The Usage glossary, arranged alphabetically, has been compiled mainly from the University of British Columbia’s Glossary of GenAI Terms. Both glossaries have been supplemented with information from other sources as indicated.

Glossary: The GenAI System

Artificial Intelligence (AI): AI is a branch of computer science. AI systems use hardware, algorithms, and data to create “intelligence” to do things like make decisions, discover patterns, and perform some sort of action. AI is a general term, and there are more specific terms used in the field of AI. The term is also used more broadly to refer to applications built with these techniques. AI systems can be built in different ways; two of the primary ways are 1) through the use of rules provided by a human (rule-based systems) or 2) with machine learning algorithms. Many newer AI systems use machine learning (see definition of machine learning below).

Algorithm: Algorithms are the “brains” of an AI system: they determine the decisions it makes. In other words, algorithms consist of the rules for the actions the AI system takes. Algorithms can either discover their own rules (machine learning; see Machine Learning below) or be rule-based, with the rules supplied by human programmers.
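To make the difference between a human-written rule and a discovered rule concrete, here is a minimal Python sketch. The quiz-score scenario, the threshold of 60, and the data are all hypothetical, and the "learning" is a deliberately tiny stand-in (a one-threshold decision stump), not how production machine learning systems are built.

```python
# Rule-based: a human programmer writes the rule directly.
def rule_based_flag(score):
    """Flag a quiz score for follow-up using a hand-written (hypothetical) threshold."""
    return score < 60  # threshold chosen by a person


# Machine learning in miniature: the threshold is discovered from labelled examples.
def learn_threshold(examples):
    """Return the threshold that misclassifies the fewest (score, flagged) pairs.

    A score counts as flagged when it is below the threshold. Each observed
    score is tried as a candidate threshold (a one-rule 'decision stump').
    """
    best_threshold, best_errors = None, None
    for candidate, _ in examples:
        errors = sum((score < candidate) != flagged for score, flagged in examples)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


# Hypothetical historical data: (quiz score, whether a teacher flagged it).
history = [(35, True), (48, True), (52, True), (66, False), (71, False), (90, False)]
learned = learn_threshold(history)
```

Here the learned rule flags scores below 66, a threshold no person wrote down; it came from the labelled examples, which is also why it would inherit any quirks in those labels.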

Artificial Narrow Intelligence (ANI): AI can solve narrow problems through what is known as artificial narrow intelligence (ANI); it is used mostly for specific, task-focused purposes. For example, a smartphone can use facial recognition to identify photos of an individual in the Photos app, but that same system cannot identify sounds. ANI exists in much of the technology that we use daily.

Artificial General Intelligence (AGI): Some forecasts predict that artificial general intelligence (AGI) will be available by 2026 (Schroeder, 2024). AGI would be capable of completing human tasks and exercising higher-order cognitive abilities, including abstract thinking, applying background knowledge, using common sense, determining cause and effect, and transferring learning (Hashemi-Pour & Lutkevich, n.d.). Its range of capabilities would greatly extend what current artificial intelligence is capable of doing.

Artificial Super Intelligence (ASI): Building on the capabilities of AGI, artificial super intelligence (ASI) is thought of as a further evolution in which AI would surpass human intelligence and act autonomously in all spheres of activity. “These machines would feature highly advanced reasoning, decision-making, and problem-solving capabilities far beyond the creative or logical capabilities of any human being” (Keary, 2024), a prospect with crucial implications for humanity.

Generative AI (GenAI) and chat-based generative pre-trained transformer (ChatGPT) models: Generative AI refers to AI systems that generate new content, such as text, images, or audio, in response to a prompt. ChatGPT is one such system, built by OpenAI on a generative pre-trained transformer (GPT), a type of neural network model that works well in natural language processing tasks (see definitions for Neural Networks and Natural Language Processing below). In this case, the model 1) can generate responses to questions (Generative); 2) was trained in advance on a large amount of the written material available on the web (Pre-trained); and 3) uses a transformer architecture, which processes the words of a passage in relation to one another rather than strictly one at a time, as some earlier models did (Transformer).

Transformer models: Used in genAI (the T in GPT stands for Transformer), transformer models are a type of neural network architecture widely used in language models. They are classified as deep learning models. Through a mechanism called attention, they give AI systems the ability to determine and focus on the most relevant parts of the input when producing each part of the output.
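The attention idea can be sketched in a few lines of Python. This is a simplified, single-query version of scaled dot-product attention, the form used in the original transformer design; the vectors below are hand-picked, hypothetical numbers, whereas a real transformer learns them and runs many attention computations in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key is compared with the query; the resulting weights say how much
    each position 'matters', and the output is the weighted mix of the values.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output


# Hypothetical 2-dimensional vectors for three tokens.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [2.0, 0.0]  # this query "looks for" the first dimension
weights, output = attention(query, keys, values)
```

Because the query points along the first dimension, the first and third tokens (whose keys share that direction) receive equal, larger weights and the second token receives less, so the output is pulled toward their values.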

Computer Vision: Computer vision is a set of computational challenges concerned with teaching computers how to understand visual information, including objects, pictures, scenes, and movement (including video). Computer vision (often thought of as an AI problem) uses techniques like machine learning to achieve the goal of understanding visual information.

Data: Data are units of information about people or objects that can be used by AI technologies.

Training Data: Training data is used to train the algorithm or machine learning model. ChatGPT, for instance, was trained on massive datasets and information available on the internet. Evaluating training data is important because the wrong data can perpetuate systemic biases. Algorithms take on the biases that are already inside the data. People often think that machines are “fair and unbiased,” but machines are only as unbiased as the humans who create them and the data on which they are trained.

Foundation Models: Foundation models are trained on large datasets and can then be used for other purposes. Large language models (LLMs), such as those employed by ChatGPT and similar systems, “are trained on large-scale text data and can generate human-like text” (NAIAC, 2023). Controversy surrounds foundation models because, depending on where their data come from, different issues of trustworthiness and bias may arise.

Large Language Model: Large Language Models (LLMs) are artificial intelligence systems specifically designed to understand, generate, and interact with human language on a large scale. These models are trained on enormous datasets comprising a wide range of text sources, enabling them to grasp the nuances, complexities, and varied contexts of natural language. LLMs like GPT (Generative Pre-trained Transformer) use deep learning techniques, particularly transformer architectures, to process and predict text sequences, making them adept at tasks such as language translation, question-answering, content generation, and sentiment analysis. (UBC, n.d.)

Human-centered Perspective: A human-centered perspective sees AI systems trained to work in alignment with humans, helping to augment human skills.

Reinforcement Learning with Human Feedback (RLHF): RLHF is a “machine learning approach that combines reinforcement learning techniques, such as rewards and comparisons, with human guidance to train an artificial intelligence agent” (Patrizio, n.d.). After the model has been pre-trained, it undergoes human testing and feedback that are supported with a system of rewards. The AI system then uses the feedback and rewards to improve its performance. (Patrizio, n.d.) This method has stirred controversy due to the ways humans have been used to review genAI outputs.

Intelligence Augmentation (IA): Augmenting means making something greater; in some cases, perhaps it means making it possible to do the same task with less effort. It can also mean letting a human choose to not do all the redundant tasks but automate some of them so they can do more things that only a human can do. It may mean other things. There’s a fine line between augmenting and replacing, and technologies should be designed so that humans can choose what a system does and when it does it.

Interpretable Machine Learning (IML): Interpretable machine learning, sometimes also called interpretable AI, describes the creation of models that are inherently interpretable in that they provide their own explanations for their decisions. Rudin (2019) argues that this approach is preferable to explainable machine learning (see definition below) for many reasons, including that we should understand what is happening in our systems from the beginning rather than try to “explain” black boxes after the fact.

Black Boxes: We call things we don’t understand “black boxes” because what happens inside the box cannot be seen. Many machine learning algorithms are “black boxes,” meaning that we don’t understand how a system uses features of the data when making its decisions (generally, we do know which features are used but not how they are used). There are currently two primary ways to pull back the curtain on the black boxes of AI algorithms: interpretable machine learning (see definition above) and explainable machine learning (see definition below).

Machine Learning (ML): Machine learning is a field of study with a range of approaches to developing algorithms that can be used in AI systems. AI is the more general term. In ML, an algorithm will identify rules and patterns in the data without a human specifying those rules and patterns. These algorithms build a model for decision making as they go through data. (You will sometimes hear the term machine learning model.) Massive amounts of data are required to train the algorithms used in machine learning, so they can make decisions. Because they discover their own rules in the data they are given, ML systems can perpetuate biases.

In machine learning, the algorithm improves without the help of a human programmer. In most cases the algorithm is learning an association (when X occurs, it usually means Y) from past training data. Since the data is historical, it may contain biases and assumptions that we do not want to perpetuate. Many questions arise about involving humans in the loop with AI systems; when ML is used to solve AI problems, a human may not be able to understand the rules the algorithm is creating and using to make decisions, leading to a black box situation. This could be especially problematic if a person were harmed by a decision a machine made and there was no way to appeal the decision.
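The "when X occurs, it usually means Y" idea, and the way historical bias carries over, can be sketched by simply counting past outcomes. This Python example is hypothetical: real ML models are far more complex, but the same principle applies, since the model reflects whatever patterns the historical data contain.

```python
from collections import Counter


def learn_association(records):
    """Estimate P(outcome | feature) by counting past (feature, outcome) pairs."""
    pair_counts = Counter(records)
    feature_counts = Counter(feature for feature, _ in records)
    return {
        (feature, outcome): count / feature_counts[feature]
        for (feature, outcome), count in pair_counts.items()
    }


# Hypothetical historical decisions: (applicant's neighbourhood, decision).
# If past decisions were skewed, the learned association is skewed too.
history = [
    ("north", "approved"), ("north", "approved"), ("north", "approved"), ("north", "denied"),
    ("south", "approved"), ("south", "denied"), ("south", "denied"), ("south", "denied"),
]
model = learn_association(history)
```

The learned model associates "north" with approval 75% of the time and "south" only 25% of the time because that is what the past decisions did; nothing in the counting step asks whether those decisions were fair.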

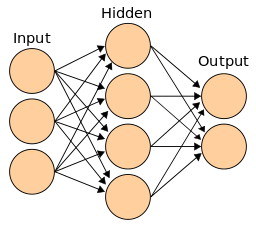

Neural Networks (NN): Neural networks, also called artificial neural networks (ANNs), are a subset of ML algorithms. They were inspired by the interconnections of neurons and synapses in the human brain. In a neural network, data enter through an input layer and then pass through a hidden layer of nodes, where each node combines its inputs using weighted connections; during training, the strengths (weights) of these connections are adjusted. The results then go to an output layer, as depicted in Figure 17.1.

Deep Learning: Deep learning models are a subset of neural networks. With multiple hidden layers, deep learning algorithms are potentially able to recognize more subtle and complex patterns. As in other neural networks, deep learning models consist of interconnected nodes whose connection weights are adjusted during training; the additional layers mean more calculations between the input and each decision the model outputs. The decisions of deep learning models are often very difficult to interpret because the many hidden layers perform different calculations that are not easily translated into English rules (or rules in another human-readable language).
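The layered flow described in the Neural Networks and Deep Learning entries can be sketched as a forward pass in Python. The weights, the sigmoid activation, and the 2-3-1 layer sizes are hypothetical choices for illustration, and training (the step that adjusts the weights) is not shown.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each node takes a weighted sum of the inputs plus a bias, then a sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(inputs, network):
    """Pass data through every layer in order: input -> hidden layer(s) -> output."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical weights for a tiny network: 2 inputs -> 3 hidden nodes -> 1 output.
hidden = ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, -0.1])
output = ([[1.0, -1.0, 0.5]], [0.2])
prediction = forward([1.0, 2.0], [hidden, output])
```

Adding more `(weights, biases)` pairs between the hidden and output layers, with sizes that line up, would make this network "deep"; each extra layer adds another round of calculations, which is part of why such models are hard to interpret.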

Natural Language Processing (NLP): Natural Language Processing is a field of Linguistics and Computer Science that also overlaps with AI. NLP uses an understanding of the structure, grammar, and meaning in words to help computers “understand and comprehend” language. NLP requires a large corpus of text (usually half a million words) to work effectively.

NLP technologies help in many situations that include: scanning texts to turn them into editable text (optical character recognition), speech to text, voice-based computer help systems, grammatical correction (like auto-correct or Grammarly), summarizing texts, and more.

Conversational or Chat AI: Conversational AI or Chat AI refers to the branch of artificial intelligence focused on enabling machines to understand, process, and respond to human language in a natural and conversational manner. This technology underpins chat bots and virtual assistants, which are designed to simulate human-like conversations with users, providing responses that are contextually relevant and coherent. Conversational AI combines elements of natural language processing (NLP), machine learning (ML), and sometimes speech recognition to interpret and engage in dialogue. (UBC, n.d.)

Robots and Bots: Robots are embodied mechanical machines that are capable of doing a physical task for humans. “Bots” are typically software agents that perform tasks in a software application (e.g., in an intelligent tutoring system). Bots are sometimes called conversational agents when they can interact using natural language with humans (as in the case of chatbots). Both robots and bots can contain AI, including machine learning, but do not have to have it. AI can help robots and bots perform tasks in more adaptive and complex ways.

Explainable Machine Learning (XML) or Explainable AI (XAI): Researchers have developed a set of processes and methods that allow humans to better understand the results and outputs of machine learning algorithms. This helps developers of AI-mediated tools understand how the systems they design work and can help ensure that they work correctly and are meeting requirements and regulatory standards.

The term “explainable” in the context of explainable machine learning or explainable AI refers to an understanding of how a model works and not to an explanation of how the model works. In theory, explainable ML/AI means that an ML/AI model will be “explained” after the algorithm makes its decision so that we can understand how the model works. This often entails using another algorithm to help explain what is happening. One issue with XML and XAI is that we cannot know for certain whether the explanation we are getting is correct (hence the black box); therefore, we cannot technically trust either the explanation or the original model. Instead, some researchers, notably Rudin (2019), recommend the use of interpretable models.
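One simple family of after-the-fact explanation methods nudges each input and watches how the output changes. The Python sketch below is a hypothetical illustration of that idea (a one-feature-at-a-time sensitivity check), not a specific published XAI method; the "black box" is a stand-in whose code we pretend not to see.

```python
def black_box(features):
    """Stand-in for an opaque model (hypothetical); pretend we cannot read this code."""
    hours_studied, hours_slept, shoe_size = features
    return 3.0 * hours_studied + 1.0 * hours_slept + 0.0 * shoe_size


def sensitivity_explanation(model, features, delta=1.0):
    """Post-hoc explanation: nudge one feature at a time and record how much the output moves."""
    baseline = model(features)
    effects = []
    for i in range(len(features)):
        nudged = list(features)
        nudged[i] += delta
        effects.append(model(nudged) - baseline)
    return effects


effects = sensitivity_explanation(black_box, [4.0, 7.0, 9.0])
```

Because this stand-in model is linear, the explanation happens to be exact (effects of 3.0, 1.0, and 0.0). For a non-linear model, the numbers would change with the starting point and the size of the nudge, which illustrates why an explanation produced by a second algorithm may not faithfully describe the original model.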

Tuning: Tuning describes the process of adjusting a pre-trained model to better suit a specific task or set of data. This involves modifying the model’s parameters so that it can more effectively process, understand, and generate information relevant to a particular application. Tuning is different from the initial training phase, where a model learns from a large, diverse dataset. Instead, it focuses on refining the model’s capabilities based on a more targeted dataset or specific performance objectives. (UBC, n.d.)

Note: The original version of this glossary was reviewed by Michael Chang, Ph.D., a CIRCLS postdoctoral scholar.

Glossary: Usage

Agent: An agent is a computer program or system that is designed to perceive its environment, make decisions and take actions to achieve a specific goal or set of goals. The agent operates autonomously, meaning it is not directly controlled by a human operator.

Anthropomorphizing: The tendency for humans to refer to or think of inanimate objects using human qualifiers is called anthropomorphizing. Given that genAI interacts with humans in a conversational way that is human-like, it is common for humans to refer to genAI tools using anthropomorphisms.

Bias: Bias is viewed in two distinct ways in relation to genAI:

Firstly, bias can refer to a systemic skew or prejudice in the AI model’s output, often reflecting inherent or learned prejudices in the data it was trained on. Bias in AI can manifest in various forms, such as cultural, gender, racial, political, or socioeconomic biases. These biases can lead to AI systems making decisions or generating content that is unfair, stereotypical, or discriminatory in nature.

Secondly, in the technical construction of AI models, particularly neural networks, bias refers to a parameter that is used alongside “weights” to influence the output of a node in the network. While weights determine how much influence an input will have on a node, biases allow for an adjustment to the output independently of its inputs. The bias parameter is essential in tuning a model’s behaviour, as it provides the flexibility needed for the model to accurately represent complex patterns in the data. Without biases, a neural network might be significantly less capable of fitting diverse and nuanced datasets, limiting its effectiveness and accuracy.
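The second, technical sense of bias can be shown with a single node. In this hypothetical Python sketch (activation function omitted for clarity), an all-zero input always yields zero without a bias, no matter what the weights are; the bias shifts the output independently of the inputs.

```python
def node_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias term (no activation function, for clarity)."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


weights = [0.4, 0.6]  # hypothetical weights
without_bias = node_output([0.0, 0.0], weights, bias=0.0)  # 0.0
with_bias = node_output([0.0, 0.0], weights, bias=1.5)     # 1.5
```

Note that this parameter has nothing to do with the social sense of bias described in the first paragraph; the two uses simply share a word.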

Bot: In the context of Generative AI, a ‘bot’ (short for robot) typically refers to a software application that is programmed to perform automated tasks. These tasks can range from simple, repetitive activities to more complex functions involving decision-making and interactions with human users. They are often equipped with advanced capabilities such as understanding and generating language, responding to user queries, or creating content based on specific guidelines or prompts.

Certain tools such as ChatGPT and Poe allow you to create your own ‘bots’ (called GPTs in the case of OpenAI; a subscription is required).

Chat Bot: A chatbot is a software application designed to simulate conversation with human users, especially over the internet. It utilizes techniques from the field of natural language processing (NLP) and sometimes machine learning (ML) to understand and respond to user queries. Chatbots can range from simple, rule-based systems that respond to specific keywords or phrases with pre-defined responses, to more sophisticated AI-driven bots capable of handling complex, nuanced, and context-dependent conversations.

Critical AI: Critical AI is an approach to examining AI from a perspective that focuses on reflective assessment and critique as a way of understanding and challenging existing ethical and cultural structures within AI. Read more about critical AI (Ruiz & Fusco, 2024).

User Experience Design/User Interface Design (UX/UI): User-experience (UX) design concerns the overall experience users have with a product, while user-interface (UI) design concerns the screens, controls, and other elements through which users interact with it. These approaches are not limited to AI work. Product designers implement UX/UI approaches to study, understand, and design the experiences their users have with their technologies (Ruiz & Fusco, 2024).

Fine-tuning: Fine-tuning is the process of taking a pre-trained AI model and further training it on a specific, often smaller, dataset to adapt it to particular tasks or requirements. This is relevant in scenarios where a general AI model, trained on varied datasets, needs to be specialized or optimized for specific applications.

A general language model could be fine-tuned with academic papers and texts from a specific discipline to better understand and generate text relevant to that field. This process involves adjusting the model’s parameters slightly so that it better aligns with the nuances and terminologies of the target domain while retaining the broad knowledge it gained during initial training.

Fine-tuning offers a balance between the extensive learning of a large, general model and the specific expertise required for particular tasks.
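Fine-tuning can be sketched with a one-parameter model. In this hypothetical Python example, the "pre-trained" weight of 2.0 stands in for what a model learned during broad training, and a few passes of gradient descent over a small, specialised dataset nudge it toward that data. Real fine-tuning adjusts millions or billions of parameters and uses additional techniques to retain general knowledge, none of which is modelled here.

```python
def fine_tune(weight, data, learning_rate=0.01, epochs=20):
    """Nudge a pre-trained weight toward a small task-specific dataset.

    Model: prediction = weight * x. Each step follows the gradient of the
    squared error, so the weight changes gradually rather than being
    retrained from scratch.
    """
    for _ in range(epochs):
        for x, y in data:
            error = weight * x - y
            weight -= learning_rate * 2 * error * x  # derivative of error**2 w.r.t. weight
    return weight


pretrained_weight = 2.0  # hypothetical value learned during broad pre-training
domain_data = [(1.0, 2.5), (2.0, 5.0), (3.0, 7.5)]  # hypothetical specialised examples
tuned_weight = fine_tune(pretrained_weight, domain_data)
```

The weight ends close to 2.5, the value implied by the specialised data, but it gets there through small adjustments from its pre-trained starting point; fewer epochs or a smaller learning rate would keep it closer to the original.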

GenAI Model vs GenAI Application: When we speak of the model, we are referring to the complex interconnections of algorithms and technologies that make up the system that generates output. When we speak of the tool or application, we are referring to the specific interface, such as ChatGPT or Bing Chat, both of which are built on generative pre-trained transformer (GPT) models (Paul R. MacPherson Institute for Leadership, Innovation and Excellence in Teaching, 2024).

Hallucinating: Hallucinations are incorrect or misleading results that AI models generate. These errors can be caused by a variety of factors, including insufficient training data, incorrect assumptions made by the model, or biases in the data used to train the model. The concept of AI hallucinations underscores the need for critical evaluation and verification of AI-generated information, as relying solely on AI outputs without scrutiny could lead to the dissemination of misinformation or flawed analyses.

Intelligent Tutoring Systems (ITS): A computer system or digital learning environment that gives instant and custom feedback to learners. An Intelligent Tutoring System may use rule-based AI (rules provided by a human) or machine learning in the underlying algorithms and code that an ITS is built with. ITSs, for example, can support adaptive learning in company professional development programs (Ruiz & Fusco, 2024).

Prompt Crafting and Prompt Engineering: Prompt engineering in the context of genAI refers to the creation of input prompts that effectively guide AI models, particularly Generative Pre-trained Transformers (GPTs), in producing specific and desired outputs. This practice involves formulating and structuring prompts to leverage the AI’s understanding and capabilities, thereby optimizing the relevance, accuracy, and quality of the generated content. Prompt engineering is typically carried out by specialists whose technical knowledge and skills enable them to push GPT models toward optimal outputs. See OpenAI’s Prompt Engineering guide (OpenAI, n.d.) for more information.

More specifically, according to Carl G. (2023), “Prompt engineering refers to the process of designing and using structured prompts to generate text responses for a wide range of NLP tasks, such as language modelling, text classification, and question answering. Prompt engineering involves using design patterns and various techniques, such as one-shot and few-shot learning, to improve the accuracy and efficiency of the generated responses.”
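Few-shot learning, mentioned in the quotation above, means including a handful of worked examples in the prompt so that the model can follow the pattern. The Python sketch below only assembles such a prompt as text; the instruction, the examples, and the "Text:/Sentiment:" format are hypothetical, and sending the prompt to a particular genAI service is not shown.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble a few-shot prompt: an instruction, worked examples, then the new case."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Text: {new_input}")
    lines.append("Sentiment:")  # left open for the model to complete
    return "\n".join(lines)


examples = [  # hypothetical worked examples
    ("The lecture was clear and engaging.", "positive"),
    ("The assignment instructions were confusing.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each text as positive or negative.",
    examples,
    "The feedback helped me improve my essay.",
)
```

With a single worked example, the same template would produce a one-shot prompt.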

GenAI end users (i.e., the general public) often describe their own prompting as prompt engineering, but what they are doing is simply prompting. In this text, prompting refers to the process of crafting prompts to elicit output from genAI systems. Remember: “[p]rompt engineering is a technique used to generate responses to a wide range of NLP tasks, while prompting [crafting] is a technique used to elicit a specific type of response or information from a user or system” (Carl G., 2023).

Tokens: Tokens are the smallest units of data that an AI model processes. In natural language processing (NLP), tokens typically represent whole words, parts of words (sub-word fragments such as prefixes or suffixes), or even individual characters, depending on the tokenization method used. Tokenization is the process of converting text into these smaller, manageable units for the AI to analyze and understand.

When using AI platforms, particularly through developer interfaces such as the OpenAI API, providers often quote the cost of a query in terms of tokens. Many subscriptions for AI applications are likewise priced according to token costs.
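Sub-word tokenization and token-based pricing can both be sketched briefly. The Python example below uses a greedy longest-match rule over a tiny, hypothetical vocabulary; real tokenizers, such as those based on byte-pair encoding, learn much larger vocabularies from data. The price per 1,000 tokens is also made up for illustration.

```python
def tokenize(text, vocabulary):
    """Greedy longest-match sub-word tokenizer over a fixed (hypothetical) vocabulary.

    Real tokenizers learn their vocabularies from data; this toy version only
    shows how words can be split into smaller pieces.
    """
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Take the longest vocabulary entry that matches at this position,
            # falling back to a single character if nothing matches.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocabulary or end == start + 1:
                    tokens.append(piece)
                    start = end
                    break
    return tokens


vocab = {"token", "ization", "is", "un", "believ", "able"}  # hypothetical vocabulary
tokens = tokenize("Tokenization is unbelievable", vocab)
# Hypothetical price, for illustration only: 0.002 currency units per 1,000 tokens.
estimated_cost = len(tokens) / 1000 * 0.002
```

The three words become six tokens (`token`, `ization`, `is`, `un`, `believ`, `able`), so the query would be billed as six tokens rather than three words.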


Attribution

Some content has been partially adapted from:

Ruiz, P., & Fusco, J. (2024, updated). Glossary of artificial intelligence terms for educators. Educator CIRCLS Blog. Used under a Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/).

University of British Columbia (UBC). (n.d.). Glossary of GenAI terms. AI in Teaching and Learning. Used under a Creative Commons Attribution 4.0 (CC BY 4.0) license.

GenAI Use

Chapter review exercises were created with the assistance of Copilot.

References

G., Carl. (2023, May 4). Prompt engineering vs prompting: Understanding the differences and applicability in NLP. LinkedIn.

Hashemi-Pour, C., & Lutkevich, B. (n.d.). What is artificial general intelligence? TechTarget. Cited in Schroeder (2024).

Keary, T. (2024, February 7, updated). What is artificial superintelligence (ASI)? Definition & examples. Techopedia.

NAIAC. (2023, August, updated). What is a foundation model? FAQs on foundation models and generative AI.

OpenAI. (n.d.). Prompt engineering. OpenAI API documentation.

OpenAI. (2023). Planning for AGI and beyond. OpenAI.

Patrizio, A. (2023, June, updated). What is reinforcement learning? TechTarget.

Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. arXiv:1811.10154.

Ruiz, P. (2023, November 10). Artificial intelligence in education: A reading guide focused on promoting equity and accountability in AI. CIRCLS.

Schroeder, R. (2024, March 13). Artificial general intelligence and higher education. Inside Higher Ed.